Adaptive Infrastructure: Building Systems That Survive AI


Your CI pipeline was designed for a different threat model.

AI is now a material contributor to production code. GitHub reports that 41% of new code is AI-generated. Qodo found that when AI code review is enabled, 80% of PRs receive zero human comments. And CodeRabbit’s analysis shows AI-generated code carries 1.7x as many bugs as human-written code.

That’s the landscape. Not hypothetical — measured.

But the real problem isn’t the bugs. It’s that the bugs are structural, and the infrastructure most teams rely on was designed to catch a completely different kind of failure.

AI Fails Differently Than Humans

CI pipelines are extremely good at catching the kinds of mistakes humans make: syntax errors, type mismatches, failing tests. AI rarely makes those mistakes. Its code passes your linter, your type checker, and your tests. It looks correct, reviews well in a diff, and creates compounding problems you won’t notice for months.

GitClear analyzed 211 million lines of code and found an 8x increase in duplicated code blocks during 2024 — the first year AI became a material contributor. Code churn rose from 3.1% to 5.7%. Copy-paste exceeded refactoring for the first time. Google’s DORA report found that every 25% increase in AI adoption correlated with a 7.2% decrease in delivery stability, even as 75% of developers reported feeling more productive.

Read that again: developers felt faster while delivery got worse.

This isn’t a tooling problem. It’s a systems design problem.

The Widening Gap

Here’s what concerns me most: model capability is on an exponential curve. Infrastructure improvement is linear. Every model release widens the gap.

In early 2025, METR ran a randomized controlled trial with 16 experienced open-source developers. Using AI tools (Cursor Pro with Claude 3.5/3.7 Sonnet), developers completed tasks 19% slower than without AI, despite believing they were 20% faster. They accepted fewer than 44% of AI suggestions. The time spent verifying and correcting AI output more than cancelled out the time it saved.

Fast forward twelve months. By early 2026, Opus-class models one-shot physics engines, coordinate multi-million-line migrations, and run headlessly in agent teams for hours. The METR study tested two-hour tasks with early models. We’re now living with agents that ship entire features autonomously.

The infrastructure that was barely adequate for Sonnet 3.5 is catastrophically inadequate for Opus 4.6 agent teams. And yet most organizations are still running the same CI pipelines they had in 2023.

Adaptive Infrastructure: A Framework

You don’t need better AI tools. You need adaptive systems — for your codebase, your process, and your culture — that evolve as fast as the models do.

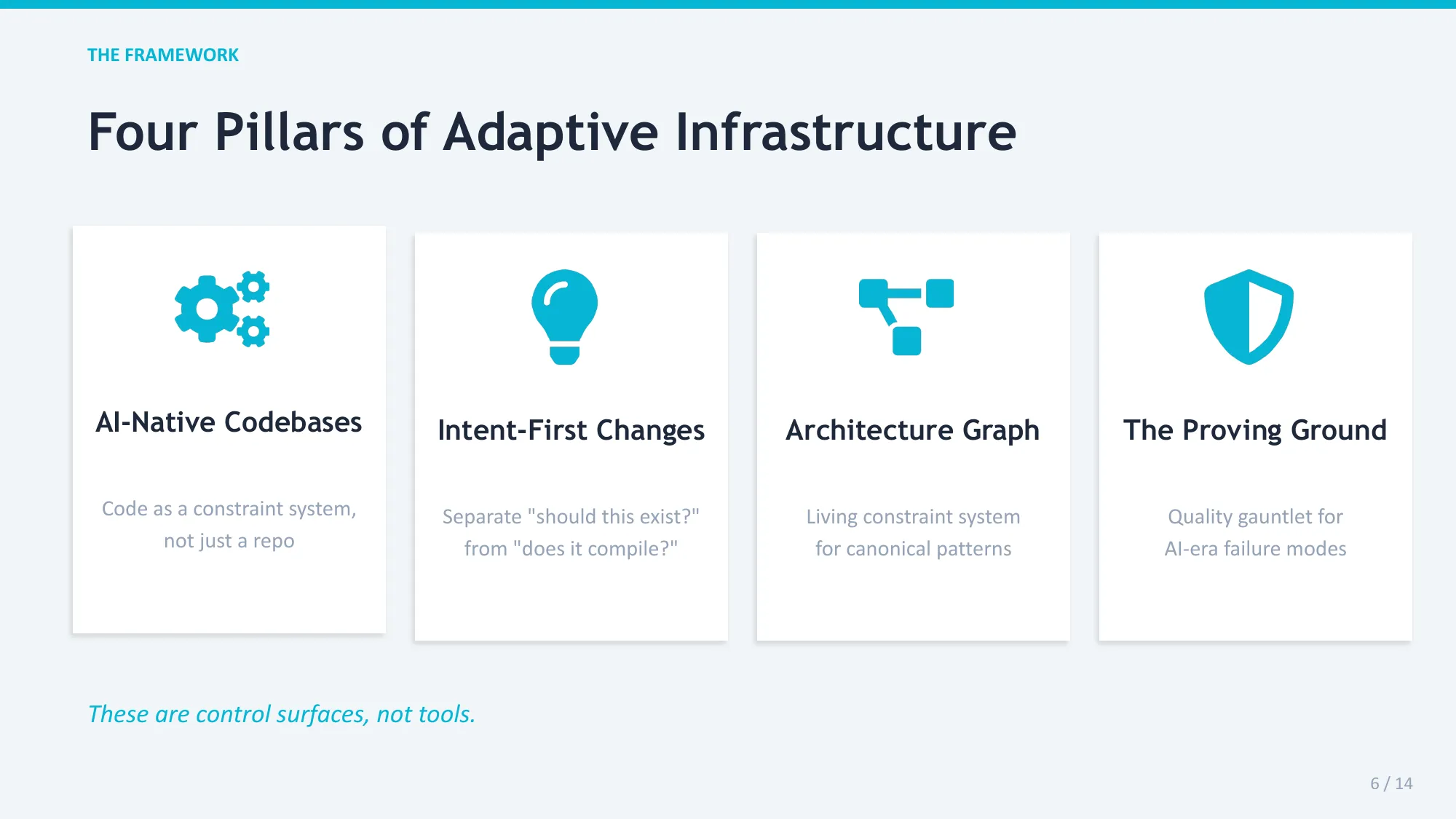

I’ve been building and thinking about this problem for the past year, both through my own engineering work and through conversations with teams across the industry. What follows is a framework organized around four control surfaces. Not products to buy. Design principles to implement.

1. The Proving Ground

A quality gauntlet designed for AI-era failure modes.

AI introduces failures CI was never built to detect: pattern drift (three correct implementations where one should exist), hallucinated dependencies (imports that look plausible but don’t exist), security surface expansion (more code means more attack surface, often with no matching security review), and hollow test suites (tests that pass but assert nothing meaningful).
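Of those failure modes, hallucinated dependencies are the easiest to check mechanically. Here is a minimal sketch, assuming a Python codebase: parse a changed file with the standard library’s `ast` module and flag any top-level import that `importlib.util.find_spec` cannot resolve in the CI environment. The function names are hypothetical, and a real gate would also compare against your lockfile, since a name that resolves is not necessarily one you meant to depend on.

```python
import ast
import importlib.util


def top_level_imports(source: str) -> set[str]:
    """Return the top-level module names a Python source file imports."""
    names: set[str] = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            names.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.level == 0 and node.module:
            # Relative imports (`from . import x`) resolve inside the package; skip them.
            names.add(node.module.split(".")[0])
    return names


def unresolvable_imports(source: str) -> list[str]:
    """Top-level imports that cannot be found in the current environment."""
    return sorted(n for n in top_level_imports(source) if importlib.util.find_spec(n) is None)
```

Run it on the files a PR touches; anything it reports either belongs in your dependency manifest or never existed.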

The Proving Ground isn’t a bigger test suite. It’s a quality firewall. The goal isn’t to block AI code — it’s to force it to prove coherence, not just correctness.

In practice, that means quality policies expressed in natural language and evaluated by AI reviewers, checks that evolve from production incidents (every post-mortem becomes a new rule), and test quality that’s scored and gated, not assumed.

Code must prove it deserves production.

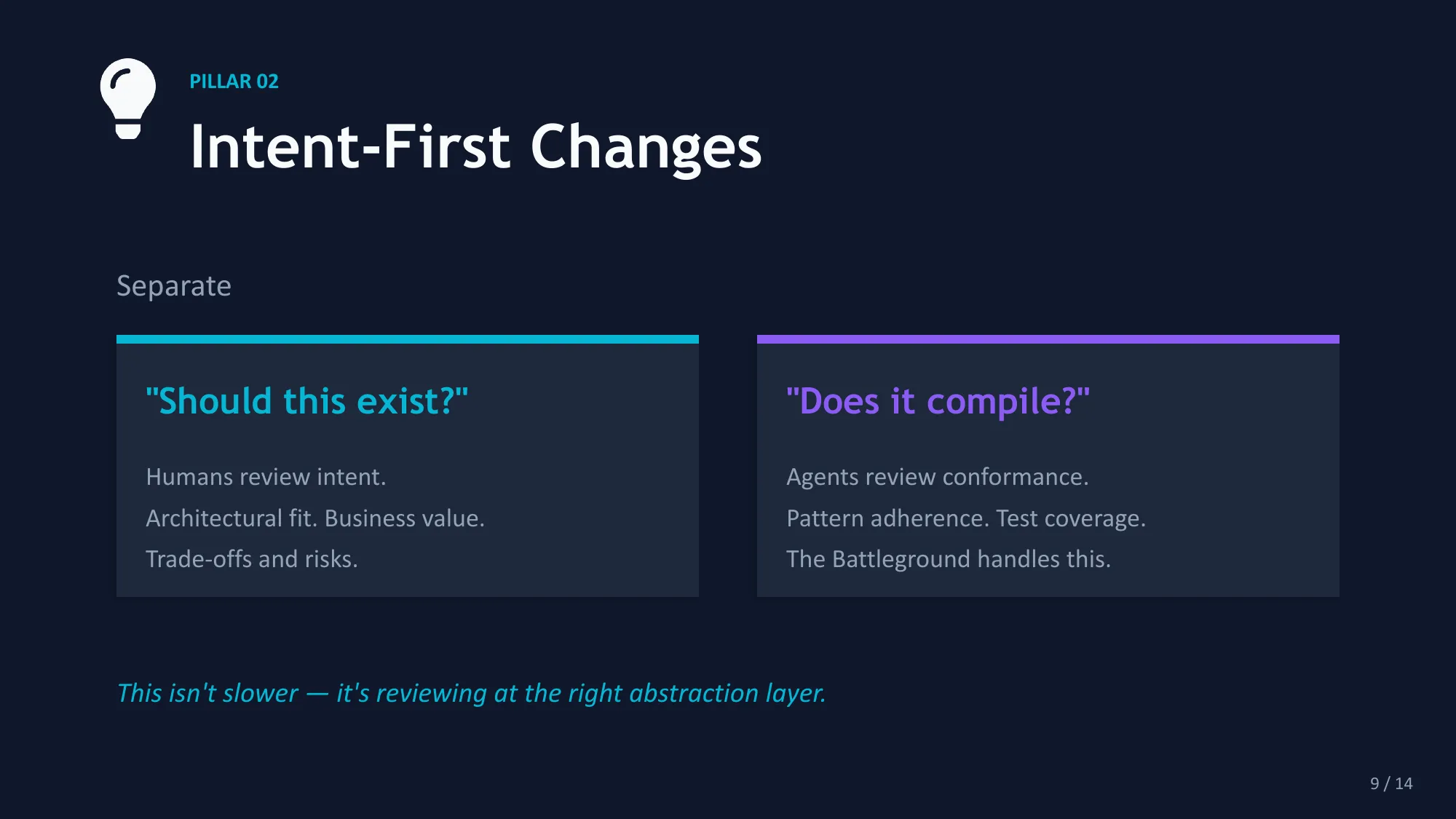

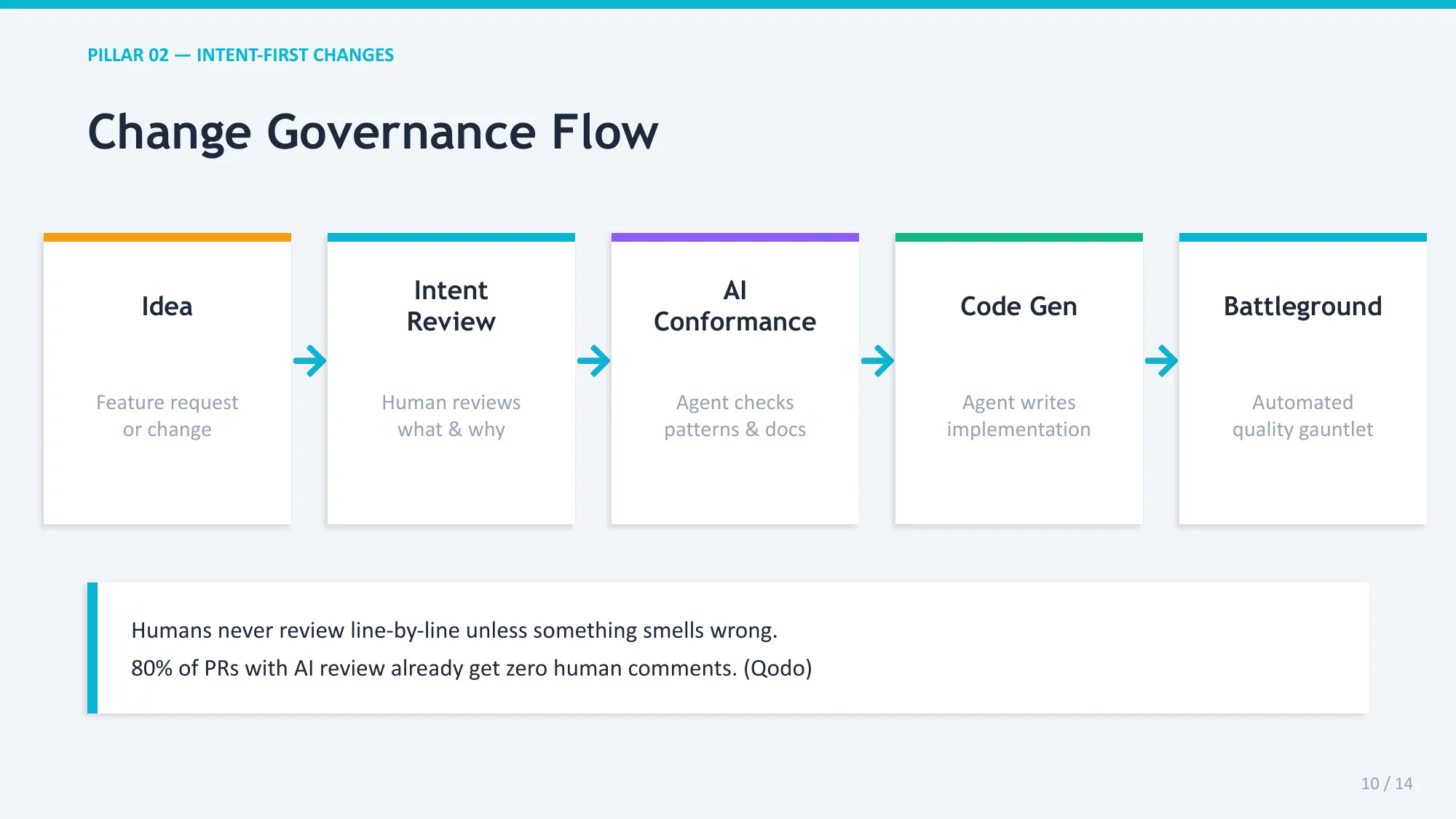

2. Intent-First Changes

Separate “should this exist?” from “does it compile?”

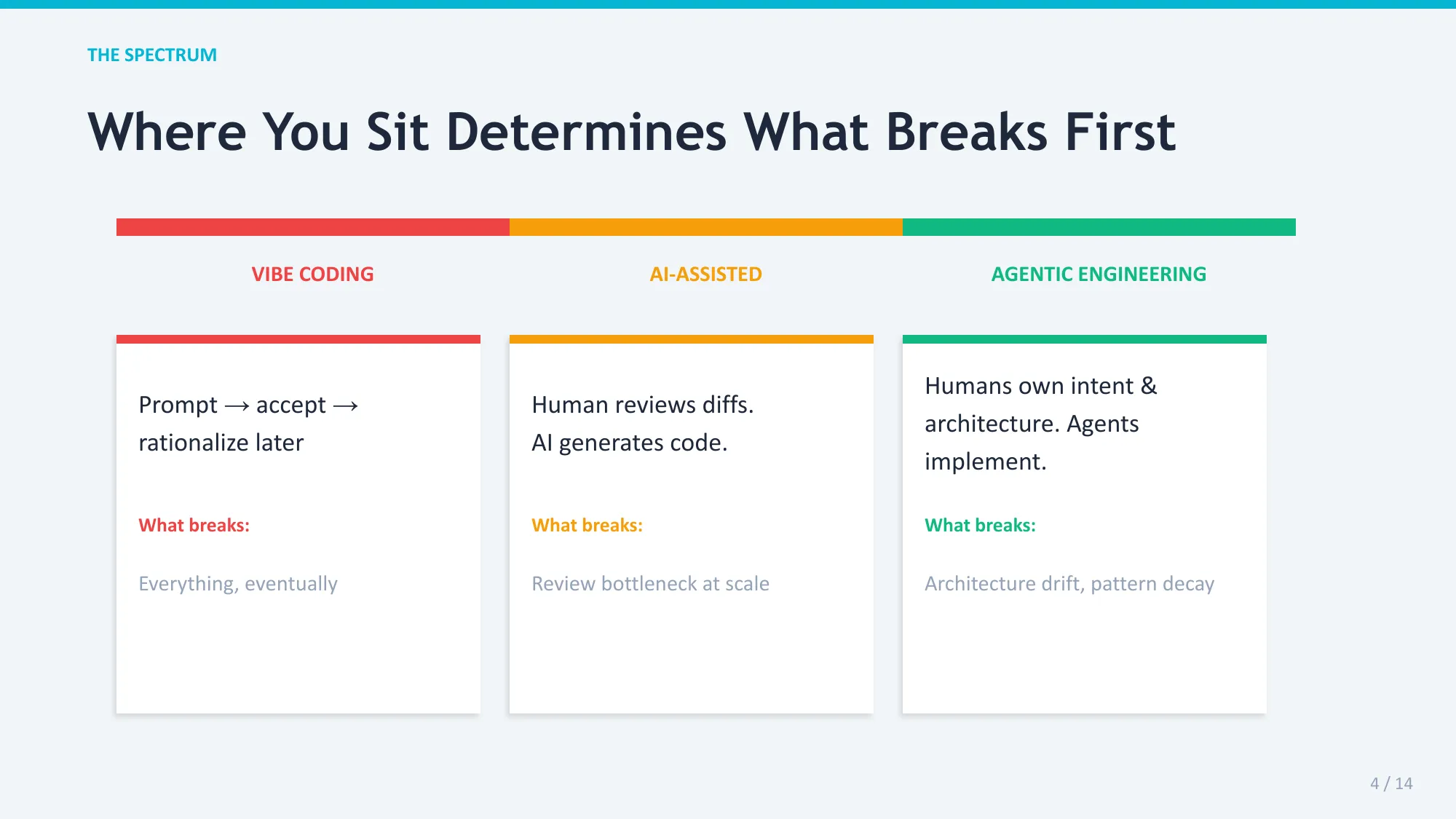

When AI writes the implementation, humans should review intent — architectural fit, business value, trade-offs. Agents should review conformance — pattern adherence, test coverage, documentation.

This isn’t slower. It’s reviewing at the right abstraction layer.
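One way to make intent reviewable is to require it in the PR description and check for it mechanically. A minimal sketch, assuming a hypothetical team convention of an `## Intent` heading followed by a prose paragraph, with a 25-word floor chosen arbitrarily. How the PR body reaches the script (for example, from your CI provider’s event payload) varies by platform and is left out.

```python
import re

# Hypothetical team convention: every PR description carries an "## Intent"
# section that a human reviewer reads before looking at the diff.
INTENT_HEADING = re.compile(r"^##[ \t]+Intent[ \t]*$", re.IGNORECASE | re.MULTILINE)
ANY_HEADING = re.compile(r"^#{1,6}[ \t]", re.MULTILINE)


def extract_intent(pr_body: str) -> str:
    """Return the text under the '## Intent' heading, up to the next heading."""
    match = INTENT_HEADING.search(pr_body)
    if not match:
        return ""
    rest = pr_body[match.end():]
    next_heading = ANY_HEADING.search(rest)
    return (rest[: next_heading.start()] if next_heading else rest).strip()


def check_intent(pr_body: str, min_words: int = 25) -> list[str]:
    """Return a list of problems; an empty list means the gate passes."""
    intent = extract_intent(pr_body)
    if not intent:
        return ["missing or empty '## Intent' section"]
    words = len(intent.split())
    if words < min_words:
        return [f"intent has {words} word(s); expected at least {min_words}"]
    return []
```

The check only verifies that intent was written down. Judging whether the change should exist stays with the human reviewer.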

OpenAI’s Codex team already works this way: agent-to-agent review workflows where humans review intent, not implementation. As one developer put it: “We didn’t remove work from software delivery, we moved it.” The expensive part is now deciding whether code deserves to exist in your main branch.

Qodo’s data shows this shift is already underway: when AI review is enabled, 80% of PRs have zero human comments. The human reviewer is already gone from most PRs. The question is whether you designed for that, or it just happened.

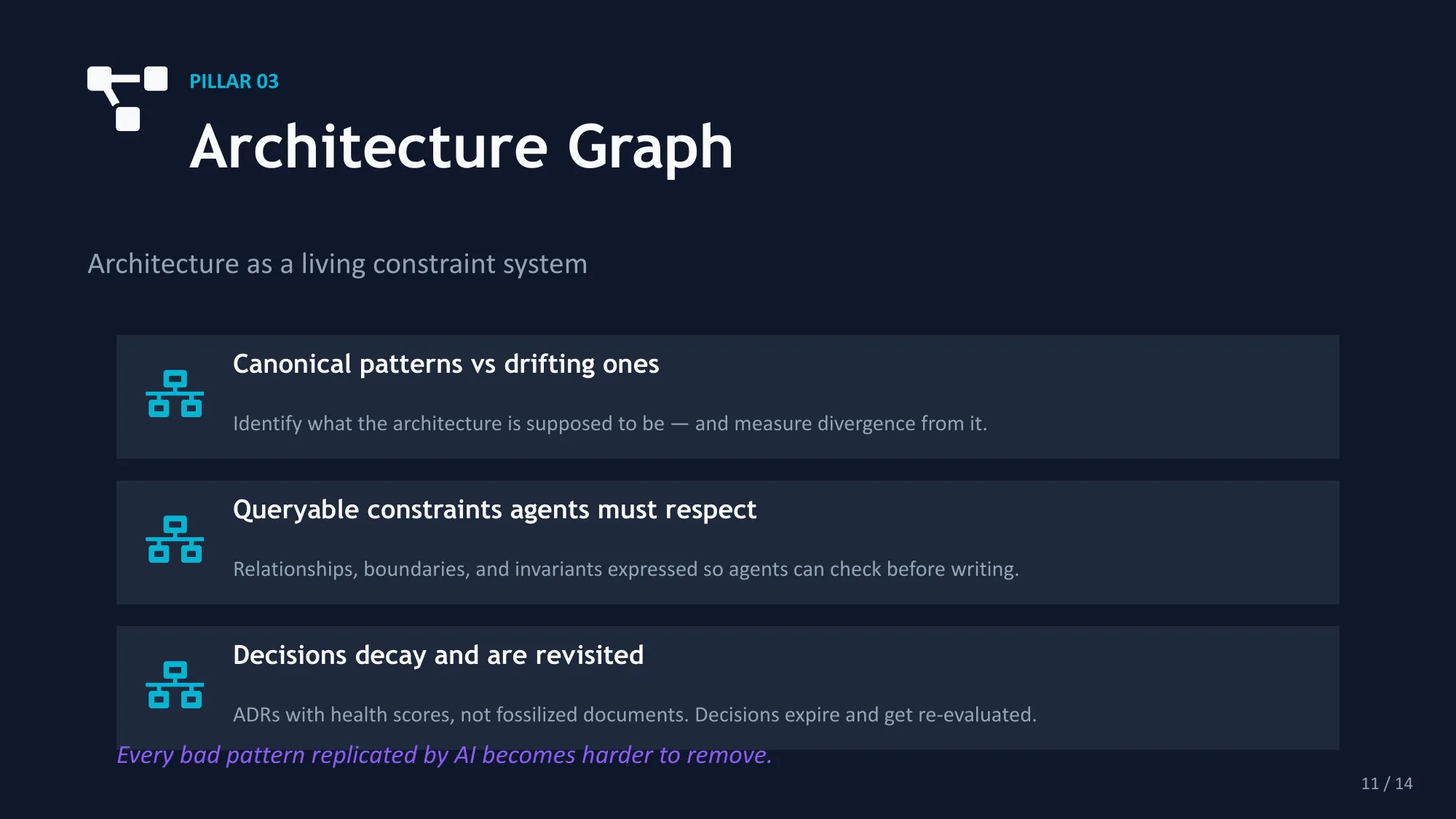

3. Architecture Graph

Architecture as a living constraint system, not a static document.

Every bad pattern replicated by AI becomes harder to remove. After enough repetition, the pattern is the architecture. That’s the compounding risk most teams miss.

The Architecture Graph identifies canonical patterns versus drifting ones and measures divergence. It expresses relationships, boundaries, and invariants in a form agents can query before they write code. And critically, it treats decisions as things that decay and need revisiting — ADRs with health scores, not fossilized documents.
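Measuring divergence can start with something as blunt as clone detection. Here is a minimal sketch for Python sources: erase identifiers and literal values from each top-level function’s body, then group functions whose remaining structure is identical. Those groups are candidates for “three implementations where one should exist.” Normalized-AST comparison is a standard clone-detection technique swapped in here for illustration. It catches renamed copies, but not functions that do the same thing with a different structure.

```python
import ast
from collections import defaultdict


class _Normalize(ast.NodeTransformer):
    """Erase identifiers and literal values so only the code's structure remains."""

    def visit_Name(self, node: ast.Name) -> ast.AST:
        return ast.copy_location(ast.Name(id="_", ctx=node.ctx), node)

    def visit_arg(self, node: ast.arg) -> ast.AST:
        node.arg, node.annotation = "_", None
        return node

    def visit_Constant(self, node: ast.Constant) -> ast.AST:
        return ast.copy_location(ast.Constant(value=None), node)


def structural_clones(source: str) -> list[list[str]]:
    """Group top-level functions whose bodies are identical up to renaming and literals."""
    groups: dict[str, list[str]] = defaultdict(list)
    for node in ast.parse(source).body:
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            body = ast.Module(body=node.body, type_ignores=[])
            groups[ast.dump(_Normalize().visit(body))].append(node.name)
    return [names for names in groups.values() if len(names) > 1]
```

Tracking the number and size of these groups over time gives a rough drift signal; picking which member of each group is canonical is still a human decision.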

The book Building Evolutionary Architectures calls these “architectural fitness functions”: objective integrity assessments of architectural characteristics. With agentic AI and MCP (the Model Context Protocol), we can finally solve the brittleness problem that made fitness functions impractical: agents can enforce governance intent without the checks being coupled to implementation details.
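A fitness function can be as simple as a boundary rule checked on every build. The sketch below assumes a hypothetical layered layout (`app.api`, `app.service`, `app.storage`, `app.domain`) and takes the import graph as input, so it stays independent of how you extract that graph. A rule table like `ALLOWED` is also the kind of structure you could expose to agents (for example, through an MCP server) so they can query boundaries before writing code; that wiring is not shown.

```python
from typing import Optional

APP_PREFIX = "app."

# Hypothetical layering rules: each layer may import only the layers listed
# (imports within the same layer are always allowed).
ALLOWED: dict[str, set[str]] = {
    "api": {"service", "domain"},
    "service": {"domain", "storage"},
    "storage": {"domain"},
    "domain": set(),
}


def layer_of(module: str) -> Optional[str]:
    """Map 'app.service.billing' to 'service'; return None for modules outside the app."""
    if not module.startswith(APP_PREFIX):
        return None
    return module.split(".")[1]


def boundary_violations(imports: dict[str, set[str]]) -> list[tuple[str, str]]:
    """Return (importer, imported) edges that cross a forbidden layer boundary."""
    violations = []
    for src, targets in sorted(imports.items()):
        src_layer = layer_of(src)
        if src_layer is None:
            continue
        for dst in sorted(targets):
            dst_layer = layer_of(dst)
            if dst_layer is None:
                continue  # stdlib or third-party module, outside these rules
            if dst_layer != src_layer and dst_layer not in ALLOWED.get(src_layer, set()):
                violations.append((src, dst))
    return violations
```

Because the rule lives in data rather than in the modules it governs, renaming files or splitting a service does not break it; only changing the architecture does.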

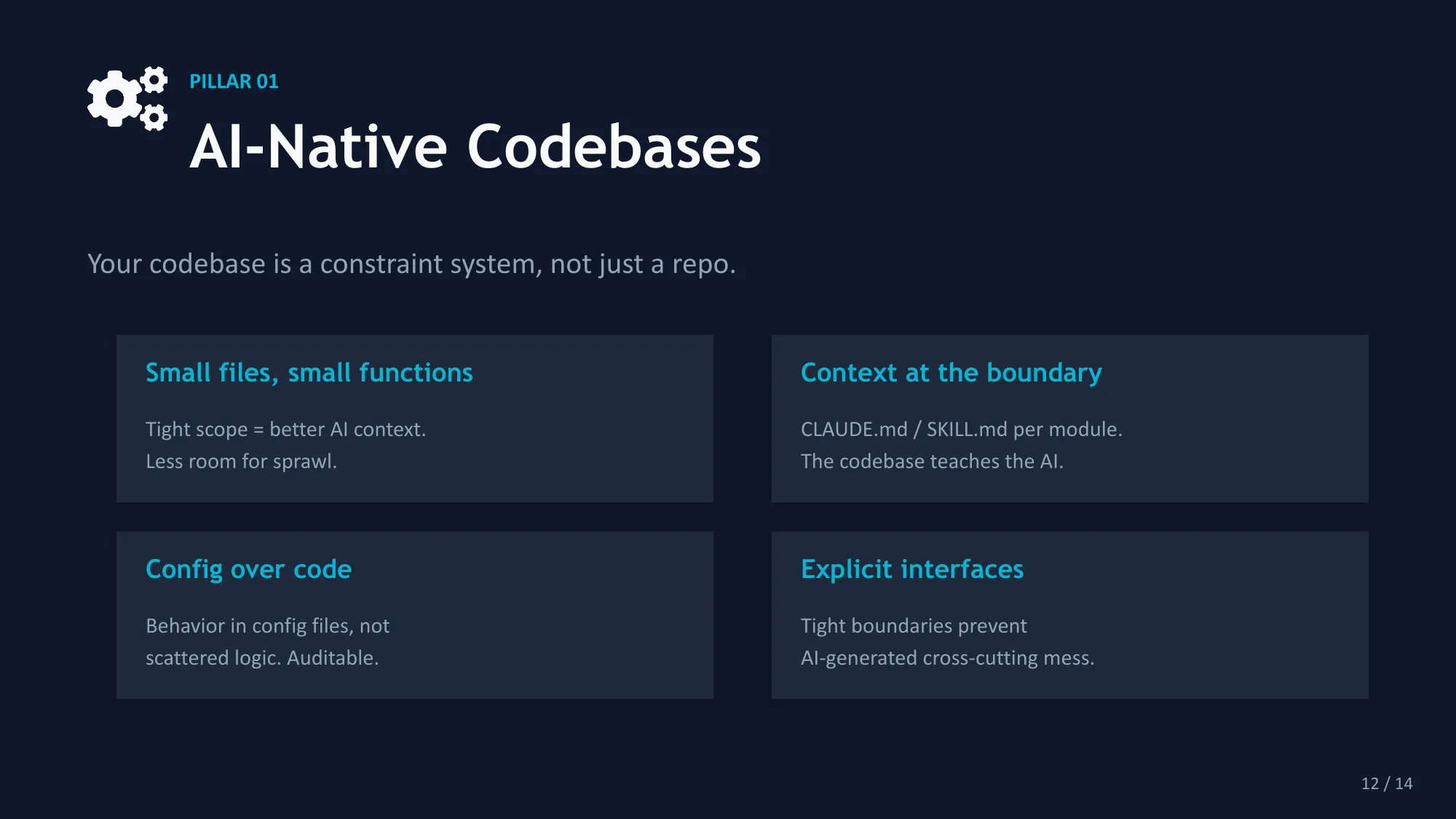

4. AI-Native Codebases

Your codebase is a constraint system, not just a repo. And it’s teaching the AI how to extend it — whether you want it to or not.

Design principles: small files and small functions (tight scope means better AI context and less room for sprawl), context at the module boundary (CLAUDE.md or SKILL.md per module — the codebase teaches the AI), config over code (behavior in config files, not scattered logic), and explicit interfaces (tight boundaries prevent AI-generated cross-cutting mess).

Every unclear boundary becomes a future cross-cutting mess. Every missing context file means the AI will infer conventions from whatever it sees first — which might be your worst code, not your best.
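Two of these principles are cheap to enforce in CI. A minimal sketch, assuming a Python codebase where each package directory (one containing `__init__.py`) counts as a module: flag functions over a line budget, and list modules with no context file. The 40-line budget and the choice to accept either `CLAUDE.md` or `SKILL.md` are illustrative assumptions.

```python
import ast
from pathlib import Path

# Hypothetical budget and file names; tune to your codebase's conventions.
MAX_FUNCTION_LINES = 40
CONTEXT_FILES = ("CLAUDE.md", "SKILL.md")


def oversized_functions(source: str, limit: int = MAX_FUNCTION_LINES) -> list[tuple[str, int]]:
    """Return (name, line_count) for every function longer than `limit` lines."""
    found = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            length = node.end_lineno - node.lineno + 1  # end_lineno exists on Python 3.8+
            if length > limit:
                found.append((node.name, length))
    return found


def modules_missing_context(root: str) -> list[str]:
    """Return package directories (those with an __init__.py) that lack a context file."""
    missing = []
    for init in sorted(Path(root).rglob("__init__.py")):
        module_dir = init.parent
        if not any((module_dir / name).is_file() for name in CONTEXT_FILES):
            missing.append(module_dir.relative_to(root).as_posix())
    return missing
```

Neither check judges whether a context file is any good; they only make sure the AI never has to guess conventions from whatever code it happens to read first.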

The Adaptive Principle

Don’t overfit the current model.

Tools change quarterly. Sonnet 3.5 to Opus 4.5 to Opus 4.6 — in twelve months. Each generation breaks different assumptions about what AI can and can’t do reliably.

Principles must survive years. Prove coherence, not just correctness. Review intent, not implementation. Architecture is alive, not documented.

DORA’s 2024-2025 trajectory suggests adaptive infrastructure works. In 2024, AI adoption correlated with decreases in delivery stability. By 2025, with 90% of developers using AI tools, throughput had recovered. Same tools, better practices: the organizations that adapted their infrastructure saw gains. But stability remains unsolved, and that’s the work still ahead.

Build systems that survive the next model you haven’t met yet.

Why This Matters for Leadership

This shows up quarters later, not immediately.

Teams that adapt recover throughput with stable delivery, contain AI-amplified tech debt early, and safely delegate more to AI agents. Teams that don’t follow a predictable arc: ship faster, degrade silently, stall later.

The strategic advantage is threefold: lower long-term engineering cost (prevent compounding debt instead of paying it down later), safer delegation to AI agents (governance guardrails that let you increase automation without increasing risk), and faster onboarding of mixed-skill contributors (the system enforces quality, not individual expertise).

10x engineers don’t scale. 10x systems do.

What To Do Monday

If you take one thing from this: audit your gauntlet. Run AI-generated code through your CI pipeline and look at what passes that shouldn’t. Those gaps define your Proving Ground.

Then: start requiring a one-paragraph intent description on every PR. Begin training the muscle of reviewing what, not how. Document your three most critical modules with context files that teach the AI how to extend them correctly. And find the pattern AI is most likely replicating wrong — fix it before it becomes the architecture.

The winners won’t be the teams with the smartest AI. They’ll be the teams whose infrastructure and processes adapt as fast as the models improve.

Slide Decks

Two versions of this talk are available below: a 14-slide technical deep dive for engineering teams, and an 8-slide executive summary.

Technical Version (14 slides)

Executive Summary (8 slides)